Gaussian processes for regression and classification

gausspr is an implementation of Gaussian processes for classification and regression.

# S4 method for class 'formula'

gausspr(x, data=NULL, ..., subset, na.action = na.omit, scaled = TRUE)

# S4 method for class 'vector'

gausspr(x,...)

# S4 method for class 'matrix'

gausspr(x, y, scaled = TRUE, type= NULL, kernel="rbfdot",

kpar="automatic", var=1, variance.model = FALSE, tol=0.0005,

cross=0, fit=TRUE, ... , subset, na.action = na.omit)

Arguments

- x

a symbolic description of the model to be fit (a formula), or, when the formula interface is not used, a matrix or vector containing the variables in the model.

- data

an optional data frame containing the variables in the model. By default the variables are taken from the environment from which gausspr is called.

- y

a response vector with one label for each row/component of x. Can be either a factor (for classification tasks) or a numeric vector (for regression).

- type

Type of problem. Either "classification" or "regression". Depending on whether y is a factor or not, the default setting for type is classification or regression, respectively, but can be overwritten by setting an explicit value.

- scaled

A logical vector indicating the variables to be scaled. If scaled is of length 1, the value is recycled as many times as needed and all non-binary variables are scaled. Per default, data are scaled internally (both x and y variables) to zero mean and unit variance. The center and scale values are returned and used for later predictions.

- kernel

the kernel function used in training and predicting. This parameter can be set to any function, of class kernel, which computes a dot product between two vector arguments. kernlab provides the most popular kernel functions which can be used by setting the kernel parameter to the following strings:

  - rbfdot: Radial Basis kernel function "Gaussian"
  - polydot: Polynomial kernel function
  - vanilladot: Linear kernel function
  - tanhdot: Hyperbolic tangent kernel function
  - laplacedot: Laplacian kernel function
  - besseldot: Bessel kernel function
  - anovadot: ANOVA RBF kernel function
  - splinedot: Spline kernel

The kernel parameter can also be set to a user defined function of class kernel by passing the function name as an argument.

- kpar

the list of hyper-parameters (kernel parameters). This is a list which contains the parameters to be used with the kernel function. Valid parameters for existing kernels are:

  - sigma: inverse kernel width for the Radial Basis kernel function "rbfdot" and the Laplacian kernel "laplacedot".
  - degree, scale, offset: for the Polynomial kernel "polydot".
  - scale, offset: for the Hyperbolic tangent kernel function "tanhdot".
  - sigma, order, degree: for the Bessel kernel "besseldot".
  - sigma, degree: for the ANOVA kernel "anovadot".

Hyper-parameters for user defined kernels can be passed through the kpar parameter as well.

- var

the initial noise variance (only for regression) (default: 1)

- variance.model

build model for variance or standard deviation estimation (only for regression) (default : FALSE)

- tol

tolerance of termination criterion (default: 0.0005)

- fit

indicates whether the fitted values should be computed and included in the model or not (default: TRUE)

- cross

if an integer value k>0 is specified, a k-fold cross validation on the training data is performed to assess the quality of the model, reported as the Mean Squared Error for regression

- subset

An index vector specifying the cases to be used in the training sample. (NOTE: If given, this argument must be named.)

- na.action

A function to specify the action to be taken if NAs are found. The default action is na.omit, which leads to rejection of cases with missing values on any required variable. An alternative is na.fail, which causes an error if NA cases are found. (NOTE: If given, this argument must be named.)

- ...

additional parameters
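The kernel argument described above accepts a user-defined function of class kernel. As a minimal sketch, the following base R snippet builds such a function. The names my_rbf and sigma are illustrative only and are not part of kernlab; the commented-out call at the end assumes kernlab is installed and is not run here.

```r
# Hypothetical user-defined kernel: an RBF-style similarity between two
# numeric vectors, k(x, y) = exp(-sigma * ||x - y||^2).
sigma <- 0.5                      # assumed inverse kernel width
my_rbf <- function(x, y) exp(-sigma * sum((x - y)^2))

# Tag the function with class "kernel" so it can be recognised as a kernel.
class(my_rbf) <- "kernel"

# Quick sanity checks using base R only.
k_same <- my_rbf(c(1, 2), c(1, 2))   # identical points, squared distance 0
k_far  <- my_rbf(c(0, 0), c(3, 4))   # squared distance 25

# With kernlab installed, the function could then be supplied as, e.g.:
# fit <- kernlab::gausspr(x, y, kernel = my_rbf)
```

Because sigma multiplies the squared distance, larger values make the kernel decay faster, which corresponds to a narrower kernel width.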

Details

A Gaussian process is specified by a mean and a covariance function. The mean is a function of \(x\) (which is often the zero function), and the covariance is a function \(C(x,x')\) which expresses the expected covariance between the value of the function \(y\) at the points \(x\) and \(x'\). The actual function \(y(x)\) in any data modeling problem is assumed to be a single sample from this Gaussian distribution.
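To make the "single sample" idea concrete, here is a minimal base R sketch that draws one function from a zero-mean Gaussian process prior. The RBF covariance, the value of sigma, the grid, and the jitter term are all assumptions chosen for illustration; this is not code from kernlab.

```r
set.seed(1)

# Grid of input points and an assumed inverse kernel width.
x <- seq(-5, 5, length.out = 50)
sigma <- 0.5

# Covariance function C(x, x') = exp(-sigma * (x - x')^2); mean function is zero.
C <- exp(-sigma * outer(x, x, "-")^2)

# A small jitter on the diagonal keeps the Cholesky factorisation numerically stable.
R <- chol(C + 1e-6 * diag(length(x)))   # upper triangular, t(R) %*% R = C + jitter

# One draw y(x) from N(0, C): if z ~ N(0, I), then t(R) %*% z has covariance C.
y_sample <- as.vector(t(R) %*% rnorm(length(x)))

# plot(x, y_sample, type = "l")   # uncomment to view the sampled function
```

Each call to rnorm yields a different smooth curve; the covariance function controls how quickly nearby values decorrelate.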

Laplace approximation is used for the parameter estimation in Gaussian processes for classification.

The predict function can return class probabilities for classification problems by setting the type parameter to "probabilities". For regression, setting the type parameter to "variance" or "sdeviation" returns the estimated variance or standard deviation at each predicted point.
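As a rough illustration of what a predictive mean and standard deviation mean in the regression case, the sketch below computes the textbook Gaussian process posterior in base R on the small data set used in the Examples. The kernel parameter sigma, the noise variance, and the helper rbf are assumed for illustration. This is not claimed to match kernlab's internal computation (which, among other things, scales the data by default), so the numbers will differ from predict().

```r
# Hypothetical helper: RBF covariance between two sets of 1-d inputs.
rbf <- function(a, b, sigma) exp(-sigma * outer(a, b, "-")^2)

x <- c(-4, -3, -2, -1, 0, 0.5, 1, 2)
y <- c(-2, 0, -0.5, 1, 2, 1, 0, -1)
xtest <- seq(-4, 2, 0.2)

sigma <- 1     # assumed kernel parameter
noise <- 0.1   # assumed noise variance

# Training covariance with noise on the diagonal, and test/train cross-covariance.
K  <- rbf(x, x, sigma) + noise * diag(length(x))
Ks <- rbf(xtest, x, sigma)

# Posterior mean: k*^T (K + noise I)^{-1} y
alpha_hat <- solve(K, y)
mu <- as.vector(Ks %*% alpha_hat)

# Posterior variance of the latent function: k(x*, x*) - k*^T (K + noise I)^{-1} k*
# (k(x*, x*) = 1 for this kernel).
v <- 1 - rowSums((Ks %*% solve(K)) * Ks)
s <- sqrt(pmax(v, 0))   # standard deviation, guarding against tiny negative round-off

# A two-standard-deviation band, analogous to the plot in the Examples.
upper <- mu + 2 * s
lower <- mu - 2 * s
```

The variance shrinks near the training inputs and grows in the gaps between them, which is the behaviour the red bands in the Examples plot are meant to show.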

Value

An S4 object of class "gausspr" containing the fitted model along with additional information. Accessor functions can be used to access the slots of the object, which include:

- alpha

The resulting model parameters

- error

Training error (if fit == TRUE)

References

C. K. I. Williams and D. Barber. Bayesian classification with Gaussian processes. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(12):1342-1351, 1998.

https://homepages.inf.ed.ac.uk/ckiw/postscript/pami_final.ps.gz

See also

Examples

# train model

data(iris)

test <- gausspr(Species~.,data=iris,var=2)

#> Using automatic sigma estimation (sigest) for RBF or laplace kernel

test

#> Gaussian Processes object of class "gausspr"

#> Problem type: classification

#>

#> Gaussian Radial Basis kernel function.

#> Hyperparameter : sigma = 0.529106206641111

#>

#> Number of training instances learned : 150

#> Train error : 0.033333333

alpha(test)

#> [[1]]

#> [,1]

#> [1,] 0.05953019

#> [2,] 0.10423156

#> [3,] 0.07122828

#> [4,] 0.08793493

#> [5,] 0.06415518

#> [6,] 0.11155535

#> [7,] 0.07249219

#> [8,] 0.05791013

#> [9,] 0.14699315

#> [10,] 0.08367653

#> [11,] 0.08141993

#> [12,] 0.06125499

#> [13,] 0.10222673

#> [14,] 0.13677885

#> [15,] 0.16731667

#> [16,] 0.27277724

#> [17,] 0.11036835

#> [18,] 0.06008251

#> [19,] 0.12357542

#> [20,] 0.08552882

#> [21,] 0.08168819

#> [22,] 0.07572896

#> [23,] 0.09448798

#> [24,] 0.08340658

#> [25,] 0.06695640

#> [26,] 0.11348019

#> [27,] 0.06369102

#> [28,] 0.06248458

#> [29,] 0.06332022

#> [30,] 0.07274323

#> [31,] 0.08364382

#> [32,] 0.08532402

#> [33,] 0.15940008

#> [34,] 0.18825818

#> [35,] 0.08298276

#> [36,] 0.07058085

#> [37,] 0.08541740

#> [38,] 0.06926163

#> [39,] 0.12105261

#> [40,] 0.05968481

#> [41,] 0.05970160

#> [42,] 0.38866635

#> [43,] 0.09435220

#> [44,] 0.08132005

#> [45,] 0.09524281

#> [46,] 0.10576207

#> [47,] 0.08620120

#> [48,] 0.07609387

#> [49,] 0.07607993

#> [50,] 0.06119649

#> [51,] -0.17459077

#> [52,] -0.10336501

#> [53,] -0.14177528

#> [54,] -0.11015472

#> [55,] -0.07922921

#> [56,] -0.06285176

#> [57,] -0.13238475

#> [58,] -0.20006531

#> [59,] -0.08649088

#> [60,] -0.10961975

#> [61,] -0.25856240

#> [62,] -0.07091286

#> [63,] -0.15677950

#> [64,] -0.06015451

#> [65,] -0.09585989

#> [66,] -0.10954922

#> [67,] -0.08914808

#> [68,] -0.07465137

#> [69,] -0.17908721

#> [70,] -0.08036263

#> [71,] -0.13187755

#> [72,] -0.06025932

#> [73,] -0.10860430

#> [74,] -0.06281064

#> [75,] -0.07023190

#> [76,] -0.08809137

#> [77,] -0.11810865

#> [78,] -0.11872573

#> [79,] -0.05987711

#> [80,] -0.09371140

#> [81,] -0.09634521

#> [82,] -0.10557496

#> [83,] -0.06367108

#> [84,] -0.08267656

#> [85,] -0.11461435

#> [86,] -0.17891554

#> [87,] -0.10977072

#> [88,] -0.14316767

#> [89,] -0.09409462

#> [90,] -0.07918276

#> [91,] -0.07551086

#> [92,] -0.06550866

#> [93,] -0.06451955

#> [94,] -0.18820495

#> [95,] -0.06496171

#> [96,] -0.08901167

#> [97,] -0.06937240

#> [98,] -0.06139823

#> [99,] -0.17489935

#> [100,] -0.06320657

#>

#> [[2]]

#> [,1]

#> [1,] 0.05972581

#> [2,] 0.09617963

#> [3,] 0.07096615

#> [4,] 0.08553534

#> [5,] 0.06492765

#> [6,] 0.11102572

#> [7,] 0.07320067

#> [8,] 0.05756567

#> [9,] 0.14080986

#> [10,] 0.08044637

#> [11,] 0.08139313

#> [12,] 0.06137213

#> [13,] 0.09767654

#> [14,] 0.13768640

#> [15,] 0.16750756

#> [16,] 0.27275142

#> [17,] 0.11049611

#> [18,] 0.05975890

#> [19,] 0.12195284

#> [20,] 0.08597985

#> [21,] 0.07788526

#> [22,] 0.07562788

#> [23,] 0.09632950

#> [24,] 0.07452214

#> [25,] 0.06618082

#> [26,] 0.10068356

#> [27,] 0.06133711

#> [28,] 0.06215583

#> [29,] 0.06255110

#> [30,] 0.07116502

#> [31,] 0.07892564

#> [32,] 0.07974776

#> [33,] 0.15991714

#> [34,] 0.18850112

#> [35,] 0.07811024

#> [36,] 0.06922025

#> [37,] 0.08431046

#> [38,] 0.07050831

#> [39,] 0.11898173

#> [40,] 0.05898149

#> [41,] 0.05992168

#> [42,] 0.34288287

#> [43,] 0.09527906

#> [44,] 0.07780336

#> [45,] 0.09461808

#> [46,] 0.09647058

#> [47,] 0.08677726

#> [48,] 0.07588213

#> [49,] 0.07631021

#> [50,] 0.06029301

#> [51,] -0.12989443

#> [52,] -0.10395084

#> [53,] -0.07471447

#> [54,] -0.06706811

#> [55,] -0.06251820

#> [56,] -0.14334399

#> [57,] -0.30013869

#> [58,] -0.11242873

#> [59,] -0.13600557

#> [60,] -0.19835992

#> [61,] -0.07860665

#> [62,] -0.07503775

#> [63,] -0.06066301

#> [64,] -0.15124178

#> [65,] -0.13222222

#> [66,] -0.08292265

#> [67,] -0.06428002

#> [68,] -0.27659590

#> [69,] -0.24522882

#> [70,] -0.25263484

#> [71,] -0.07926294

#> [72,] -0.12832004

#> [73,] -0.18073073

#> [74,] -0.08433443

#> [75,] -0.08523084

#> [76,] -0.10686128

#> [77,] -0.08059267

#> [78,] -0.08569920

#> [79,] -0.06635275

#> [80,] -0.11564114

#> [81,] -0.12355582

#> [82,] -0.29313370

#> [83,] -0.07078273

#> [84,] -0.09943879

#> [85,] -0.14318342

#> [86,] -0.15661716

#> [87,] -0.13778959

#> [88,] -0.07297032

#> [89,] -0.09657218

#> [90,] -0.06785501

#> [91,] -0.07527288

#> [92,] -0.08171501

#> [93,] -0.10395084

#> [94,] -0.07813492

#> [95,] -0.10689768

#> [96,] -0.06985243

#> [97,] -0.11980275

#> [98,] -0.05982203

#> [99,] -0.14142707

#> [100,] -0.09902571

#>

#> [[3]]

#> [,1]

#> [1,] 0.30927486

#> [2,] 0.22878128

#> [3,] 0.39116783

#> [4,] 0.18548695

#> [5,] 0.31130945

#> [6,] 0.14339987

#> [7,] 0.32198474

#> [8,] 0.18417896

#> [9,] 0.19002534

#> [10,] 0.17593840

#> [11,] 0.26717803

#> [12,] 0.16792058

#> [13,] 0.18807198

#> [14,] 0.20667065

#> [15,] 0.09891870

#> [16,] 0.20062403

#> [17,] 0.21547747

#> [18,] 0.08009908

#> [19,] 0.36987717

#> [20,] 0.10315632

#> [21,] 0.46873809

#> [22,] 0.10301388

#> [23,] 0.43068784

#> [24,] 0.14222405

#> [25,] 0.13182009

#> [26,] 0.18984781

#> [27,] 0.33668588

#> [28,] 0.58504334

#> [29,] 0.22290857

#> [30,] 0.08154140

#> [31,] 0.12057968

#> [32,] 0.11340382

#> [33,] 0.08743554

#> [34,] 0.51857308

#> [35,] 0.23171783

#> [36,] 0.28675758

#> [37,] 0.30629661

#> [38,] 0.26901943

#> [39,] 0.11605783

#> [40,] 0.14377505

#> [41,] 0.13964783

#> [42,] 0.17788561

#> [43,] 0.10161207

#> [44,] 0.18169954

#> [45,] 0.12761458

#> [46,] 0.09931844

#> [47,] 0.11194291

#> [48,] 0.11870078

#> [49,] 0.15032653

#> [50,] 0.10875415

#> [51,] -0.12231609

#> [52,] -0.26552141

#> [53,] -0.09857308

#> [54,] -0.19934200

#> [55,] -0.07526251

#> [56,] -0.14339039

#> [57,] -0.56278359

#> [58,] -0.15525549

#> [59,] -0.21274973

#> [60,] -0.19645539

#> [61,] -0.23070629

#> [62,] -0.18035760

#> [63,] -0.10460057

#> [64,] -0.27037072

#> [65,] -0.13427798

#> [66,] -0.12044289

#> [67,] -0.22161360

#> [68,] -0.27628518

#> [69,] -0.22981812

#> [70,] -0.56291004

#> [71,] -0.09535087

#> [72,] -0.28950875

#> [73,] -0.18031267

#> [74,] -0.32679041

#> [75,] -0.14180909

#> [76,] -0.19057002

#> [77,] -0.37353110

#> [78,] -0.41058698

#> [79,] -0.09221272

#> [80,] -0.27587635

#> [81,] -0.15701440

#> [82,] -0.29762557

#> [83,] -0.08101084

#> [84,] -0.56671657

#> [85,] -0.48740357

#> [86,] -0.15582521

#> [87,] -0.16284584

#> [88,] -0.25693085

#> [89,] -0.45396052

#> [90,] -0.12626507

#> [91,] -0.07914211

#> [92,] -0.12085856

#> [93,] -0.26552141

#> [94,] -0.08747732

#> [95,] -0.10748613

#> [96,] -0.09615312

#> [97,] -0.26806593

#> [98,] -0.16366661

#> [99,] -0.19910030

#> [100,] -0.38615021

#>

# predict on the training set

predict(test,iris[,-5])

#> [1] setosa setosa setosa setosa setosa setosa

#> [7] setosa setosa setosa setosa setosa setosa

#> [13] setosa setosa setosa setosa setosa setosa

#> [19] setosa setosa setosa setosa setosa setosa

#> [25] setosa setosa setosa setosa setosa setosa

#> [31] setosa setosa setosa setosa setosa setosa

#> [37] setosa setosa setosa setosa setosa setosa

#> [43] setosa setosa setosa setosa setosa setosa

#> [49] setosa setosa versicolor versicolor versicolor versicolor

#> [55] versicolor versicolor versicolor versicolor versicolor versicolor

#> [61] versicolor versicolor versicolor versicolor versicolor versicolor

#> [67] versicolor versicolor versicolor versicolor versicolor versicolor

#> [73] versicolor versicolor versicolor versicolor versicolor virginica

#> [79] versicolor versicolor versicolor versicolor versicolor virginica

#> [85] versicolor versicolor versicolor versicolor versicolor versicolor

#> [91] versicolor versicolor versicolor versicolor versicolor versicolor

#> [97] versicolor versicolor versicolor versicolor virginica virginica

#> [103] virginica virginica virginica virginica versicolor virginica

#> [109] virginica virginica virginica virginica virginica virginica

#> [115] virginica virginica virginica virginica virginica versicolor

#> [121] virginica virginica virginica virginica virginica virginica

#> [127] virginica virginica virginica virginica virginica virginica

#> [133] virginica versicolor virginica virginica virginica virginica

#> [139] virginica virginica virginica virginica virginica virginica

#> [145] virginica virginica virginica virginica virginica virginica

#> Levels: setosa versicolor virginica

# class probabilities

predict(test, iris[,-5], type="probabilities")

#> setosa versicolor virginica

#> [1,] 0.88753138 0.05689392 0.05557470

#> [2,] 0.81804651 0.09720401 0.08474947

#> [3,] 0.86756972 0.06751595 0.06491433

#> [4,] 0.84050111 0.08247813 0.07702076

#> [5,] 0.87894415 0.06078681 0.06026903

#> [6,] 0.79965138 0.10111191 0.09923671

#> [7,] 0.86425875 0.06843377 0.06730748

#> [8,] 0.89074111 0.05567829 0.05358060

#> [9,] 0.74851740 0.13117479 0.12030781

#> [10,] 0.84854855 0.07895822 0.07249323

#> [11,] 0.84953381 0.07595354 0.07451265

#> [12,] 0.88449170 0.05858476 0.05692354

#> [13,] 0.81836322 0.09502539 0.08661138

#> [14,] 0.75865618 0.12194438 0.11939944

#> [15,] 0.71320242 0.14373450 0.14306307

#> [16,] 0.57138470 0.21444903 0.21416627

#> [17,] 0.80103688 0.09996755 0.09899557

#> [18,] 0.88701602 0.05747737 0.05550661

#> [19,] 0.78126121 0.11121707 0.10752172

#> [20,] 0.84203262 0.07929291 0.07867447

#> [21,] 0.85234683 0.07728143 0.07037173

#> [22,] 0.85946029 0.07102509 0.06951462

#> [23,] 0.82578652 0.08678047 0.08743301

#> [24,] 0.85391271 0.07962121 0.06646608

#> [25,] 0.87520667 0.06385449 0.06093884

#> [26,] 0.80686531 0.10564173 0.08749295

#> [27,] 0.88234622 0.06120620 0.05644758

#> [28,] 0.88281316 0.05962563 0.05756120

#> [29,] 0.88164097 0.06059697 0.05776207

#> [30,] 0.86607502 0.06904350 0.06488148

#> [31,] 0.84994953 0.07908435 0.07096612

#> [32,] 0.84771409 0.08072077 0.07156514

#> [33,] 0.72464676 0.13766751 0.13768573

#> [34,] 0.68297043 0.15859697 0.15843260

#> [35,] 0.85123366 0.07854839 0.07021796

#> [36,] 0.86962570 0.06715710 0.06321720

#> [37,] 0.84365601 0.07982842 0.07651557

#> [38,] 0.86944133 0.06527738 0.06528129

#> [39,] 0.78571518 0.11000525 0.10427957

#> [40,] 0.88794365 0.05733943 0.05471692

#> [41,] 0.88718363 0.05705390 0.05576246

#> [42,] 0.46364351 0.29971299 0.23664351

#> [43,] 0.82678031 0.08735922 0.08586046

#> [44,] 0.85268723 0.07680091 0.07051185

#> [45,] 0.82658639 0.08775748 0.08565613

#> [46,] 0.81655460 0.09861712 0.08482828

#> [47,] 0.84077499 0.07983660 0.07938841

#> [48,] 0.85897528 0.07181786 0.06920685

#> [49,] 0.85856332 0.07126065 0.07017603

#> [50,] 0.88557947 0.05871736 0.05570317

#> [51,] 0.10110982 0.61182979 0.28706039

#> [52,] 0.06483920 0.71657941 0.21858139

#> [53,] 0.07477925 0.55910176 0.36611900

#> [54,] 0.08710305 0.74138331 0.17151364

#> [55,] 0.04869399 0.65379151 0.29751450

#> [56,] 0.04698317 0.81406068 0.13895615

#> [57,] 0.07857650 0.62036876 0.30105474

#> [58,] 0.16305052 0.67782774 0.15912174

#> [59,] 0.05580034 0.76073063 0.18346903

#> [60,] 0.08577546 0.75028550 0.16393905

#> [61,] 0.19990090 0.58380190 0.21629721

#> [62,] 0.04990982 0.78782482 0.16226536

#> [63,] 0.12037728 0.70788700 0.17173572

#> [64,] 0.04105089 0.75944985 0.19949926

#> [65,] 0.07422642 0.82734342 0.09843016

#> [66,] 0.06881821 0.73823579 0.19294601

#> [67,] 0.06378071 0.73205920 0.20416008

#> [68,] 0.05788726 0.86123643 0.08087631

#> [69,] 0.12025355 0.55409712 0.32564933

#> [70,] 0.06437623 0.83491275 0.10071102

#> [71,] 0.07423431 0.49170068 0.43406501

#> [72,] 0.04351574 0.85456883 0.10191544

#> [73,] 0.06729670 0.53157726 0.40112604

#> [74,] 0.04467682 0.81675664 0.13856654

#> [75,] 0.04766142 0.82299872 0.12933986

#> [76,] 0.05612397 0.76042407 0.18345196

#> [77,] 0.06865349 0.61448731 0.31685920

#> [78,] 0.05125044 0.39336785 0.55538171

#> [79,] 0.04130370 0.74408121 0.21461510

#> [80,] 0.07554041 0.84212228 0.08233731

#> [81,] 0.07818216 0.80643711 0.11538074

#> [82,] 0.08629694 0.80481440 0.10888866

#> [83,] 0.04876898 0.86419728 0.08703374

#> [84,] 0.04922320 0.45918647 0.49159032

#> [85,] 0.08281562 0.70169299 0.21549139

#> [86,] 0.11493440 0.62319751 0.26186810

#> [87,] 0.06308906 0.64619318 0.29071776

#> [88,] 0.10082474 0.65393438 0.24524088

#> [89,] 0.07077257 0.81529078 0.11393665

#> [90,] 0.06292248 0.79994819 0.13712933

#> [91,] 0.05918638 0.80686645 0.13394717

#> [92,] 0.04450154 0.78322170 0.17227676

#> [93,] 0.04975310 0.85037829 0.09986861

#> [94,] 0.15352388 0.68817670 0.15829943

#> [95,] 0.05002657 0.82624140 0.12373203

#> [96,] 0.06687175 0.83397300 0.09915526

#> [97,] 0.05177949 0.83831619 0.10990432

#> [98,] 0.04275097 0.84038614 0.11686289

#> [99,] 0.14416240 0.72044262 0.13539498

#> [100,] 0.04779154 0.84548606 0.10672240

#> [101,] 0.10091766 0.11901088 0.78007145

#> [102,] 0.05972819 0.25399054 0.68628127

#> [103,] 0.05912038 0.09687096 0.84400866

#> [104,] 0.04299254 0.19310186 0.76390560

#> [105,] 0.04565676 0.07637838 0.87796485

#> [106,] 0.11721845 0.13230652 0.75047502

#> [107,] 0.14196352 0.48855382 0.36948266

#> [108,] 0.08851875 0.14580241 0.76567885

#> [109,] 0.09314320 0.19988193 0.70697487

#> [110,] 0.15888319 0.17031521 0.67080160

#> [111,] 0.05527745 0.21971480 0.72500775

#> [112,] 0.04844443 0.17492314 0.77663243

#> [113,] 0.04527709 0.10301103 0.85171188

#> [114,] 0.08951830 0.25446597 0.65601573

#> [115,] 0.09086865 0.13341348 0.77571787

#> [116,] 0.06140704 0.11790702 0.82068593

#> [117,] 0.04310312 0.21362747 0.74326941

#> [118,] 0.21382096 0.21927400 0.56690504

#> [119,] 0.19131600 0.19225447 0.61642952

#> [120,] 0.12029895 0.49797001 0.38173105

#> [121,] 0.06297702 0.09403547 0.84298751

#> [122,] 0.07382921 0.27379136 0.65237943

#> [123,] 0.14485751 0.16007734 0.69506516

#> [124,] 0.04832469 0.31283608 0.63883922

#> [125,] 0.06562818 0.13602609 0.79834573

#> [126,] 0.08380411 0.17668701 0.73950887

#> [127,] 0.04407405 0.35827218 0.59765377

#> [128,] 0.04673427 0.39228731 0.56097842

#> [129,] 0.04580147 0.09249720 0.86170134

#> [130,] 0.08564732 0.25258836 0.66176432

#> [131,] 0.09780859 0.14643662 0.75575479

#> [132,] 0.22357610 0.23245555 0.54396835

#> [133,] 0.04984738 0.08246936 0.86768327

#> [134,] 0.04566665 0.54084590 0.41348745

#> [135,] 0.07011815 0.45523128 0.47465058

#> [136,] 0.12766906 0.14154702 0.73078392

#> [137,] 0.10502129 0.15290570 0.74207301

#> [138,] 0.04834755 0.24607032 0.70558213

#> [139,] 0.04987056 0.43227099 0.51785845

#> [140,] 0.05200719 0.12251317 0.82547964

#> [141,] 0.05829619 0.08009331 0.86161050

#> [142,] 0.06306076 0.11736013 0.81957912

#> [143,] 0.05972819 0.25399054 0.68628127

#> [144,] 0.06177645 0.08718444 0.85103911

#> [145,] 0.08523930 0.10507985 0.80968085

#> [146,] 0.05211101 0.09557109 0.85231791

#> [147,] 0.07292389 0.25355609 0.67352002

#> [148,] 0.04117433 0.15910760 0.79971808

#> [149,] 0.10421443 0.18441219 0.71137338

#> [150,] 0.05395463 0.36716662 0.57887874

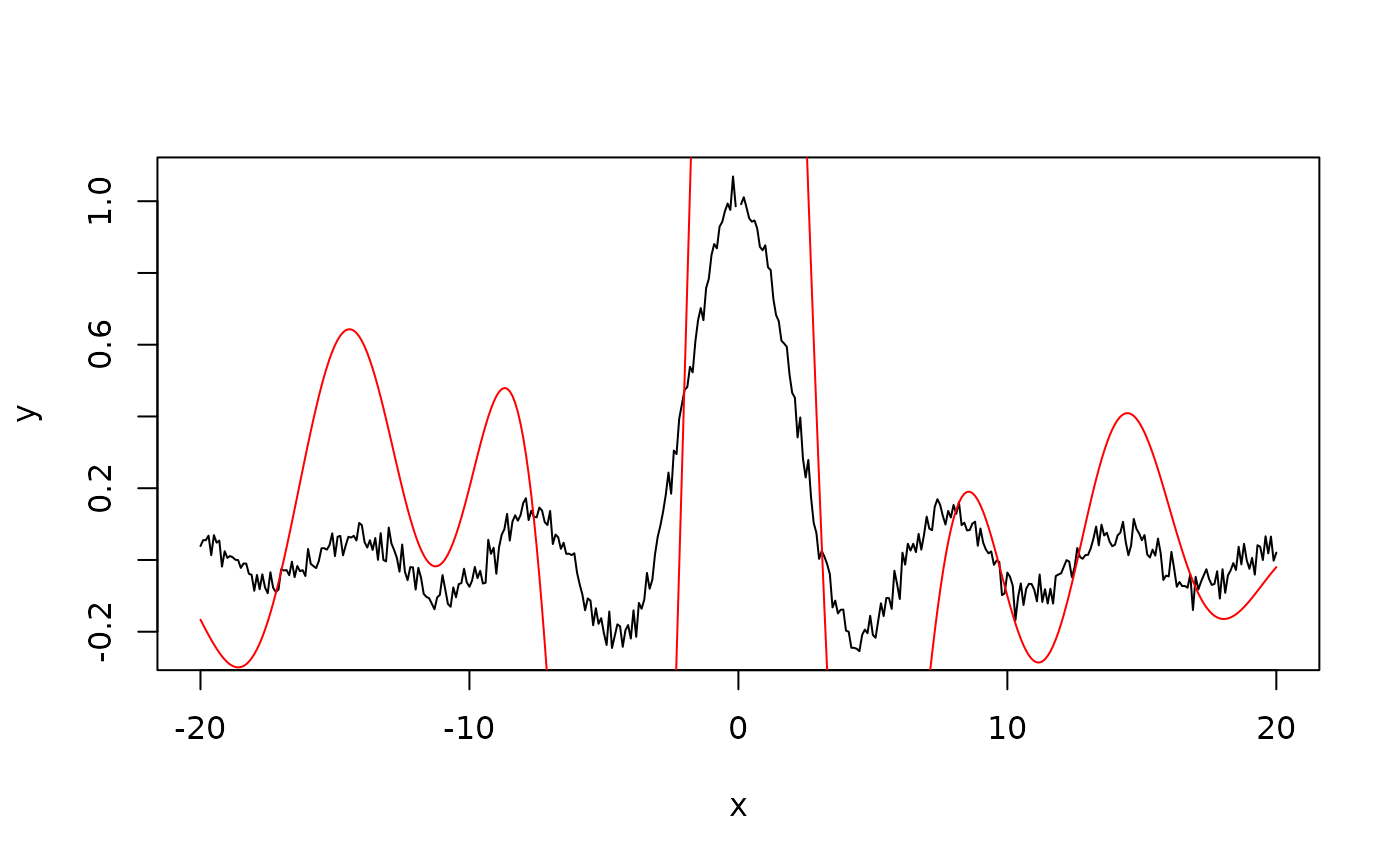

# create regression data

x <- seq(-20,20,0.1)

y <- sin(x)/x + rnorm(401,sd=0.03)

# regression with gaussian processes

foo <- gausspr(x, y)

#> Using automatic sigma estimation (sigest) for RBF or laplace kernel

foo

#> Gaussian Processes object of class "gausspr"

#> Problem type: regression

#>

#> Gaussian Radial Basis kernel function.

#> Hyperparameter : sigma = 18.7314522799961

#>

#> Number of training instances learned : 400

#> Train error : 20.160862847

# predict and plot

ytest <- predict(foo, x)

plot(x, y, type ="l")

lines(x, ytest, col="red")

#predict and variance

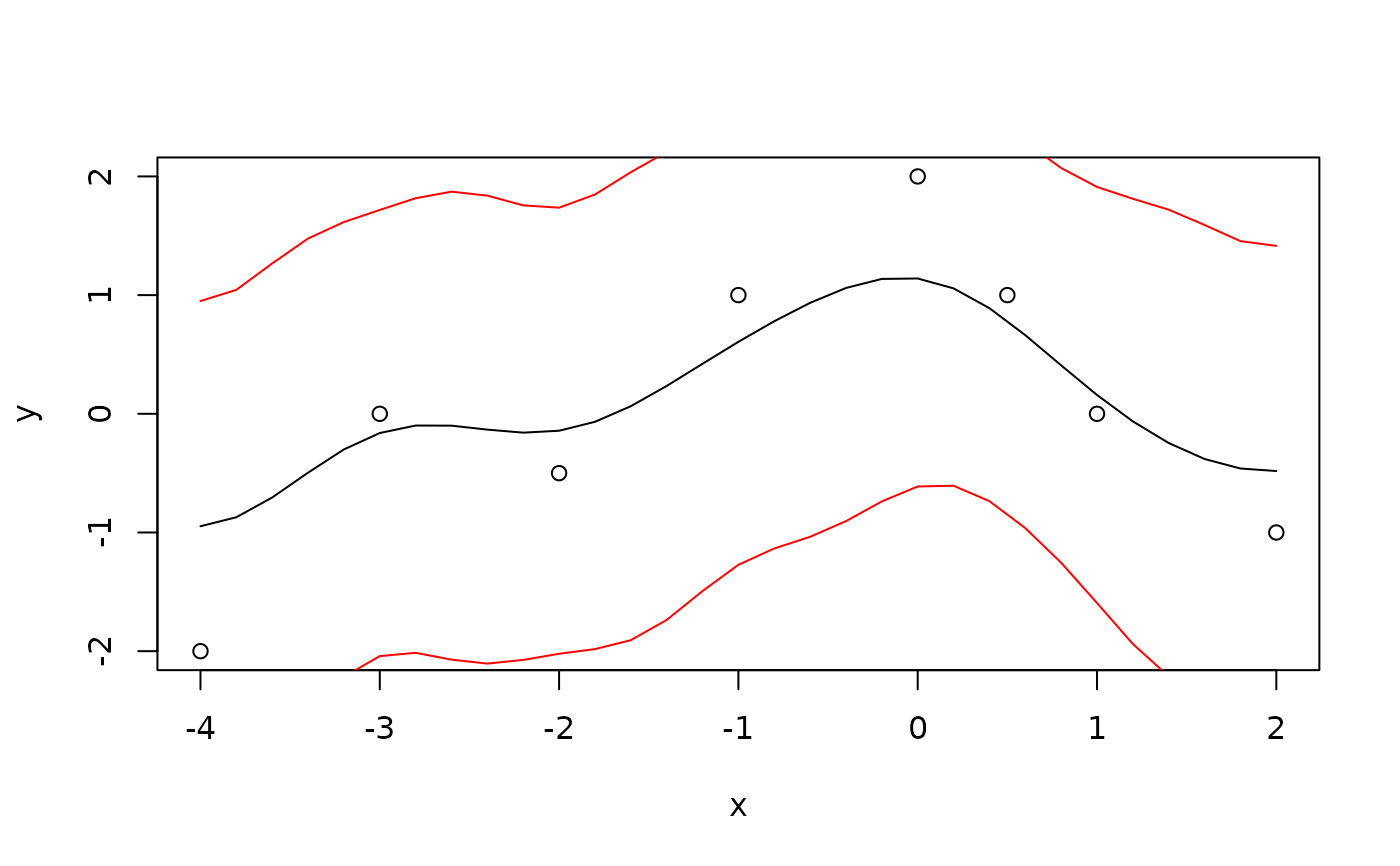

x = c(-4, -3, -2, -1, 0, 0.5, 1, 2)

y = c(-2, 0, -0.5,1, 2, 1, 0, -1)

plot(x,y)

foo2 <- gausspr(x, y, variance.model = TRUE)

#> Using automatic sigma estimation (sigest) for RBF or laplace kernel

xtest <- seq(-4,2,0.2)

lines(xtest, predict(foo2, xtest))

lines(xtest,

predict(foo2, xtest)+2*predict(foo2,xtest, type="sdeviation"),

col="red")

lines(xtest,

predict(foo2, xtest)-2*predict(foo2,xtest, type="sdeviation"),

col="red")
